You’ve probably noticed it before. You’re deep into a productive conversation with an AI, getting great responses, when suddenly everything goes sideways. The assistant starts ignoring your instructions, contradicts what it said ten messages ago, or just seems... off.

Here’s what’s most likely happening: the conversation has outgrown the model’s context window, the working memory it uses to keep track of everything said so far.

Understanding AI Memory

Think of an AI conversation like working at a desk. There’s only so much surface area available. Every message, every file you share, and every response takes up space. When the desk gets full, something has to go, and it’s usually the oldest stuff that gets pushed off first.

This isn’t a bug. It’s how these systems work by design.
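To make the desk analogy concrete, here is a minimal sketch of "oldest stuff gets pushed off first." It is an illustration only: the function name `trim_to_budget` and the 1.3 tokens-per-word estimate are assumptions for this example, and real assistants may summarize or compact old messages rather than simply dropping them.

```python
import math
from collections import deque


def trim_to_budget(messages, budget_tokens, tokens_per_word=1.3):
    """Keep the newest messages that fit in the budget; drop the oldest first.

    Hypothetical helper: token cost is a rough word-count estimate,
    not the output of a real tokenizer.
    """
    kept = deque()
    used = 0
    # Walk from newest to oldest so recent messages get space first.
    for text in reversed(messages):
        cost = math.ceil(len(text.split()) * tokens_per_word)
        if used + cost > budget_tokens:
            break  # everything older than this falls off the desk
        kept.appendleft(text)
        used += cost
    return list(kept)


history = [
    "use bullet points please",
    "here is my budget of nine thousand dollars",
    "what should I do next",
]
# With a small budget, the oldest message (the bullet-point instruction) is dropped.
print(trim_to_budget(history, budget_tokens=18))
```

Notice that the first thing to go is the formatting instruction from message one, which is exactly the kind of drift you see in long conversations.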

What Gets Counted

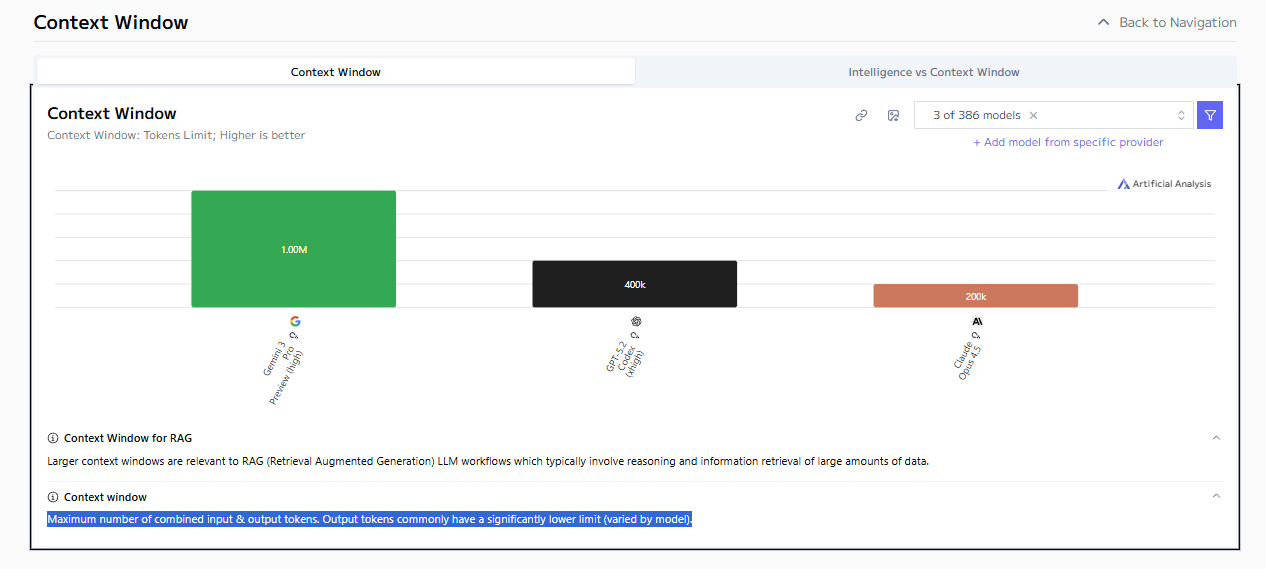

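AIs measure memory in “tokens,” which are essentially chunks of text. A rough guideline for English: 100 words typically come to around 130 tokens. Different models handle different amounts, and the limits change with model version and subscription plan, so treat these figures as approximate: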

ChatGPT (in the web interface): Handles roughly 45,000-60,000 words before things get tight

Claude: Can work with around 150,000 words effectively

Gemini Pro: Has massive capacity—up to 750,000 words or more
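If you want to put numbers on this, the 100-words-to-130-tokens guideline is easy to turn into a quick estimator. This is a back-of-the-envelope sketch, not a real tokenizer; the function names are made up for this example, and actual counts vary by model and language.

```python
TOKENS_PER_100_WORDS = 130  # the rough guideline above, not an exact ratio


def words_to_tokens(words: int) -> int:
    """Convert a word count to an approximate token count."""
    return round(words * TOKENS_PER_100_WORDS / 100)


def estimate_tokens(text: str) -> int:
    """Estimate tokens for a piece of text by counting whitespace-separated words."""
    return words_to_tokens(len(text.split()))


# The word capacities listed above, expressed as approximate tokens.
for label, words in [("150,000 words", 150_000), ("750,000 words", 750_000)]:
    print(label, "is roughly", words_to_tokens(words), "tokens")
```

By this estimate, 150,000 words is about 195,000 tokens and 750,000 words is about 975,000 tokens.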

The Performance Sweet Spot

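Here’s something counterintuitive: just because a model can handle a million tokens doesn’t mean you should fill it. As a rule of thumb, quality tends to peak when you’re using about 30-60% of available capacity. Push past 70%, and you’ll often notice degradation: slower responses, less accuracy, more mistakes. Treat these numbers as working estimates rather than hard limits, since the exact point varies by model and task.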
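Those percentages can be sketched as a simple lookup. The thresholds come from the rule of thumb above; the function name, the band labels, and the "getting full" label for the 60-70% gap are assumptions added for illustration.

```python
def usage_band(tokens_used: int, capacity_tokens: int) -> str:
    """Classify context usage using the article's rule-of-thumb thresholds."""
    if capacity_tokens <= 0:
        raise ValueError("capacity_tokens must be positive")
    fraction = tokens_used / capacity_tokens
    if fraction < 0.30:
        return "light"
    if fraction <= 0.60:
        return "sweet spot"
    if fraction <= 0.70:
        return "getting full"  # assumed label for the gap between 60% and 70%
    return "degradation likely"


# 450,000 tokens used out of a 1,000,000-token window is 45% of capacity.
print(usage_band(450_000, 1_000_000))
```

You will rarely know your exact token count, so in practice this is more of a mental model than a tool: estimate roughly where you are and act before you reach the last band.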

Warning Signs Your Context Is Full

Your instructions vanish. You asked for bullet points in message one. By message twenty, you’re getting paragraphs.

Contradictions appear. The AI recommended Strategy A earlier, now it’s pushing Strategy B without acknowledging the shift.

Facts get fuzzy. You mentioned a $9,000 budget. Now it’s referencing $6,500. Where’d that number come from?

Claude users: If you see “Organizing thoughts...” or “Compacting conversation,” that’s Claude telling you memory is tight.

Practical Solutions

The Clean Slate Approach

When you hit around 60% capacity (often 15-20 messages in a typical conversation, and sooner if you’ve shared long files), try this:

Ask the AI: “Can you summarize what we’ve covered, what we’ve decided, and what we’re working on next?”

Copy that summary.

Start a fresh conversation: “Here’s where we left off: [paste summary]. Let’s continue.”

You get a clean workspace while keeping the essential context.
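If you do this often, or you work with a model through code rather than a chat window, the two prompts above can be kept as small templates. This is a sketch with hypothetical names; it only builds the text and does not call any AI service.

```python
# The summary request from the steps above, stored once so it stays consistent.
SUMMARY_REQUEST = (
    "Can you summarize what we've covered, what we've decided, "
    "and what we're working on next?"
)


def fresh_start_prompt(summary: str) -> str:
    """Build the opening message for a new conversation from a pasted summary."""
    cleaned = summary.strip().rstrip(".")  # avoid a doubled period
    return f"Here's where we left off: {cleaned}. Let's continue."


print(SUMMARY_REQUEST)
print(fresh_start_prompt("We chose Strategy A with a $9,000 budget."))
```

Keeping the wording fixed means every reset carries over the same three things: what was covered, what was decided, and what comes next.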

Be Strategic About Files

Different file types eat memory at different rates:

Plain text files are lightweight

PDFs with just text are moderate

Images and complex spreadsheets cost more

Videos are expensive

Before uploading a 100-page document, ask yourself: do I need all of it? Extract relevant sections instead.
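One simple way to extract relevant sections is to keep only the paragraphs that mention the topics you care about. The sketch below assumes the document is plain text with blank lines between paragraphs; the function name and the keyword-matching approach are illustrative choices, and plain keyword matching will miss relevant passages that use different wording.

```python
def extract_relevant(document: str, keywords: list[str]) -> str:
    """Return only the paragraphs that contain at least one keyword."""
    paragraphs = [p.strip() for p in document.split("\n\n") if p.strip()]
    wanted = [k.lower() for k in keywords]
    hits = [p for p in paragraphs if any(k in p.lower() for k in wanted)]
    return "\n\n".join(hits)


report = (
    "Budget: $9,000 total.\n\n"
    "Company history since 1990.\n\n"
    "Timeline: launch in Q3."
)
# Keeps the budget and timeline paragraphs, drops the company history.
print(extract_relevant(report, ["budget", "timeline"]))
```

Pasting the trimmed result instead of the full file leaves more of the workspace free for the actual conversation.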

Build Awareness

Pay attention to when quality drops. After a few projects, you’ll develop intuition for your limits. Keep mental notes:

What types of tasks filled up memory fastest?

At what point did responses start degrading?

Which model handled your specific use case best?

Mid-Conversation Resets

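Don’t want to start over? Ask for a summary every 10-15 messages. This creates checkpoints that help the AI refocus on what matters. Because each summary is itself a recent message, your key decisions sit near the end of the conversation instead of only at the start, where they’re most likely to get pushed off.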

Common Mistakes

Pushing too hard. “Just one more question” at 80% capacity turns into five more questions and unusable output.

Dumping everything upfront. Uploading five documents at once burns your workspace before you’ve asked anything useful.

Ignoring the signals. When something feels off, it probably is. Don’t power through—refresh instead.

The Real Skill

The best AI users don’t have marathon 50-message sessions. They have focused 15-message sprints, then reset. They’re selective about what they include. They stop before quality drops, not after.

Your job isn’t just to ask questions. It’s to manage the workspace—keep it clean, focused, and effective.

Try it. Next time you’re deep in a conversation and things start feeling strained, pause. Summarize. Start fresh. You’ll be surprised how much sharper the responses become.