Hey —

I keep coming back to this question. Not because it’s new — people have been asking it for years — but because the answer is shifting faster than most firms can keep up. What actually happens to the role of a financial analyst once AI is genuinely capable of doing the work we used to call “the work”?

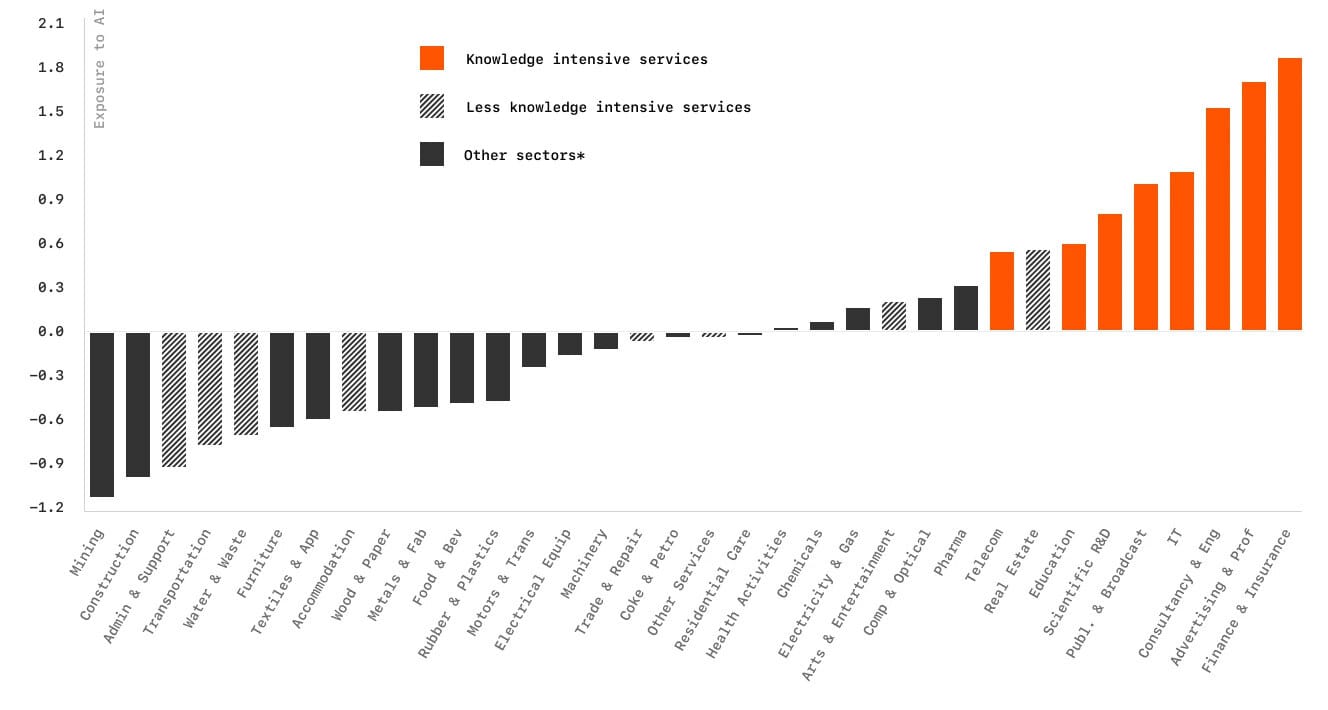

The headlines make it simple: AI replaces jobs, juniors go first. The reality is more complicated. AI is redefining what counts as core work, and that redefinition moves up the org chart. Some things that used to be high-value are now baseline. Skills that were once niche are table stakes. The places where analysts add irreplaceable value shift closer to decision and strategy — whether you’re in investment banking, corporate FP&A, or on the buy side.

If you’re in a senior role, you’ve probably felt it already. Models get built faster. Prep cycles shrink. But that’s just the surface. The bigger change is in how analyst time gets allocated and what you can reasonably expect from them.

CFA Institute recently put that to the test. Six leading AI models went head-to-head with seasoned equity analysts to produce SWOT analyses, and the results were hard to ignore. In some cases, the AI didn’t just keep up—it spotted risks and strategic gaps the humans had missed. This wasn’t a thought exercise. They ran a controlled comparison on three “market darlings” as of February 2025: Deutsche Telekom in Germany, Daiichi Sankyo in Japan, and Kirby Corporation in the US. Each was the most positively rated stock in its region, heavily endorsed by analyst consensus.

They chose them precisely because they were consensus favorites. If an AI can surface weaknesses in companies everyone thinks are bulletproof, that’s not just useful—it’s a sign the tech can challenge groupthink and change how research is done.

And here’s the part that should make you pay attention: with the right prompts, some models beat human analysts for specificity and depth. That doesn’t mean the humans are obsolete—AI can’t read the room in a management meeting or hear the subtext in “cautiously optimistic” guidance. But it does mean that synthesis, pattern-matching, and surfacing blind spots are now very much in the AI wheelhouse.

The other takeaway: prompting matters more than most people think. In their test, moving from a vague “Give me a SWOT on Deutsche Telekom” to a detailed, structured prompt improved the output by up to 40 percent. That’s the difference between a generic overview and something that reads like institutional-grade research. Prompting is becoming the new Excel: a base skill that separates competent from great.

Not all models are equal, either. In their run:

Google Gemini Advanced 2.5 (Deep Research) came out on top.

OpenAI o1 Pro was a close second, with strong reasoning.

ChatGPT 4.5 was solid but clearly behind the leaders.

Grok 3 showed promise but was still inconsistent.

DeepSeek R1 was fast but less polished.

ChatGPT 4o was the baseline.

The reasoning-optimized models—the ones built for “Deep Research”—consistently outperformed their general-purpose counterparts. More context, better fact-checking, fewer generic statements. It’s like the difference between a senior and a junior hire. Both can get the job done, but one needs a lot less oversight. And just like humans, the models that took longer to think—10 to 15 minutes instead of under a minute—delivered better work.

Is AI Really Better at Predicting Earnings?

On raw directional accuracy, in at least one study, yes. An academic study compared GPT-4 with human analysts using data from Compustat and IBES. With a chain-of-thought prompt, GPT-4 achieved 60.35% accuracy in forecasting the direction of future earnings, versus 52.71% for human analysts. The F1-score followed a similar pattern, favoring GPT-4.

Across multiple sources, that same result repeats: roughly 60% for the model versus the low 50s for human analysts. So yes, on unguided prediction, AI is ahead in raw accuracy. But those scenarios lacked context: no qualitative inputs, no forward-looking management commentary, and certainly no judgment about macro conditions.
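If you want to see what those two numbers actually measure, here is a minimal sketch in Python. The function name `directional_metrics` and the eight toy calls are my own illustrative assumptions; they are not the study's code or data. Each call is simply "earnings go up" (True) or "earnings go down" (False), scored against what happened.

```python
def directional_metrics(predicted, actual):
    """Score binary direction calls (True = earnings increase) against outcomes."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty sequences of equal length")

    pairs = [(bool(p), bool(a)) for p, a in zip(predicted, actual)]
    true_pos = sum(1 for p, a in pairs if p and a)       # called up, went up
    false_pos = sum(1 for p, a in pairs if p and not a)  # called up, went down
    false_neg = sum(1 for p, a in pairs if not p and a)  # called down, went up
    correct = sum(1 for p, a in pairs if p == a)

    accuracy = correct / len(pairs)
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Toy data only: eight made-up direction calls and outcomes.
predicted = [True, True, False, True, False, False, True, False]
actual = [True, False, False, True, True, False, True, False]

metrics = directional_metrics(predicted, actual)
print(metrics)
```

Accuracy counts every correct call equally. F1 looks only at the "up" calls, balancing how often they were right against how many real increases were missed, which is why studies tend to report both.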

Who Is Using AI?

Bain reports that 16% of M&A practitioners used generative AI in 2023, rising to 21% in 2025. Among the most active acquirers, adoption is 36%. Dealroom similarly reports 20% adoption, with over half expecting to adopt by 2027.

More broadly, a Bain survey finds 95% of US companies now use generative AI in some form, with average use cases growing from 2.5 to 5 between 2023 and 2024.

Across all industries, McKinsey notes that 78% of organizations use AI in at least one business function, up from 55% just a year prior.

What This Looks Like in Practice

Let’s start with a real-world example: Centerline, a financial firm, deployed V7 Go for diligence workflows. Within one month, their productivity rose by 35%. That’s not a hypothetical. It’s hours freed, tasks automated.

JPMorgan is investing in AI at scale: an $18 billion technology budget in 2025, an in-house generative AI platform used by over 200,000 employees, and around 100 AI tools being built. The result? Servicing costs are down by 30%, a 10% headcount reduction is projected for certain efficiency-related roles, and advisory productivity has more than tripled in the asset and wealth management arms.

In India, the RBI reports that generative AI could deliver efficiency gains of up to 46% in the banking sector.

McKinsey estimates AI could drive $200–$340 billion annually in value for banking, around 5% of industry revenue. Deloitte even predicts a productivity boost of up to 35% for front-office roles in investment banks by 2026.

Crucially, an MIT study found that 95% of generative AI pilots in enterprises don’t affect P&L, not because AI fails, but due to flawed integration with workflows. So the story isn’t just “AI works,” it’s “integration, or lack thereof, makes or breaks impact.”

The data shows:

AI outperforms humans in directional earnings forecasts (~60% vs ~53%).

Real firms are reporting productivity lifts of 35%, servicing-cost reductions of 30%, and advisory productivity that has more than tripled.

Adoption is widespread and accelerating: roughly 20–36% among M&A practitioners, and 78% of organizations using AI in at least one business function.

This isn’t speculative. The force multiplier effect is real, and it’s already reshaping pipelines and workflows.

What’s Actually Getting Automated

Look at an entry-level IB analyst’s workflow in M&A. Historically, they’d spend 2–3 days just pulling multi-year financial statements from filings, reconciling them across different accounting standards, normalizing currencies, and then slotting them into the firm’s proprietary templates. The analysis part, the thing that gets presented, didn’t even start until that grind was done.

Now? AI can chew through a confidential information memorandum in under an hour, spit out structured tables, and cross-check against internal accounting rules you’ve uploaded into the system. A large language model can ingest the PDF, recognize where the EBITDA adjustments are hiding in the footnotes, standardize it, and output clean Excel-ready data without the intern manually typing a single cell. Accuracy rates can be higher than human first passes, because the system doesn’t get tired and skip a row.

This isn’t a thought experiment. Tools like V7 Go or Cyndx’s Scholar are already doing this. And it’s not just financial statements. Fund reports, investor decks, 10-Qs—all can be run through the pipeline. The AI doesn’t just give you numbers; it matches them to the categories in your internal templates. That’s a week’s worth of manual work collapsed into an afternoon.

So yes, entry-level analysts are exposed. If 70–80% of their job is this kind of data gathering and normalization, that’s exactly the kind of work AI is already eating.

The Skills Pipeline Problem

If you stop there, you miss the bigger consequence. Entry-level grunt work was never valuable in itself; it was a training ground. You learned the industry by handling raw, messy data. You got a feel for where companies bury the bad news, for what “adjusted” really means in practice, for why the same EBITDA margin can mean something very different in two industries.

If AI takes away the grind, how do you replace the learning? This is not a rhetorical question. If you hire fewer juniors, and the ones you do hire don’t get that slow, repetitive exposure to the raw material, you end up with a generation of mid-levels who are great at interpreting AI output but worse at knowing when the AI is wrong. In finance, where one bad assumption can blow up a deal or a portfolio, that is a problem.

So the C-suite question isn’t “do we cut juniors?” It’s “if we change their work, how do we still build judgment?” That means deliberately structuring workflows so human review isn’t just a formality. Pair the AI’s output with a requirement to trace back every figure to its source. Build anomaly-spotting exercises into onboarding. Treat AI as a force multiplier, not a black box.
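To make the "trace every figure back to its source" rule concrete, here is one way it could look as a simple gate in Python. Everything in it is hypothetical: the `ExtractedFigure` structure, the `untraced` helper, the file names, and the numbers are illustrative assumptions, not any vendor's format. The idea is only that a figure without a document and page reference goes to a human before it goes into a deck.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ExtractedFigure:
    """One number pulled out by the AI, plus where it claims to have found it."""
    label: str
    value: float
    source_doc: Optional[str] = None
    source_page: Optional[int] = None


def untraced(figures):
    """Return labels of figures that lack a document or a page reference."""
    return [
        f.label
        for f in figures
        if not f.source_doc or f.source_page is None
    ]


# Made-up example values, in millions.
figures = [
    ExtractedFigure("Revenue FY2023", 1250.0, "annual_report.pdf", 41),
    ExtractedFigure("Adjusted EBITDA FY2023", 310.0, "cim.pdf", None),
    ExtractedFigure("Net debt", 480.0),
]

needs_review = untraced(figures)
print(needs_review)
```

A check like this doesn't verify that the cited page really contains the number. That part is still the junior's job, and it is exactly the exposure to raw material that builds judgment.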

Mid-Level Analysts Become Integrators

Mid-levels are already evolving into something closer to “workflow architects.” They still need domain expertise—credit, M&A, portfolio construction—but they also need to understand enough Python or SQL to tweak the data pipeline, enough prompt engineering to adjust what the AI produces, enough model literacy to know when to trust the output and when to re-run it with different constraints.

These aren’t programming jobs, they’re integration jobs. The analyst isn’t writing an LLM from scratch. They’re orchestrating the steps: upload the documents, run the entity extraction, cross-reference with internal benchmarks, push the results into the BI dashboard, annotate the anomalies, then package for the MD. The bottleneck moves from typing to deciding.
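Here is a rough sketch of what that orchestration can look like in Python, under heavy assumptions. The `DealPacket` structure, the step functions, the company names, and the margins are all invented for illustration. The extraction step is a stand-in that copies values from pre-parsed dictionaries, where a real workflow would call an AI tool.

```python
from dataclasses import dataclass, field


@dataclass
class DealPacket:
    """Working state handed from one step to the next (hypothetical structure)."""
    documents: list
    figures: dict = field(default_factory=dict)
    anomalies: list = field(default_factory=list)
    steps_run: list = field(default_factory=list)


def extract_figures(packet):
    # Stand-in for the AI extraction step: each "document" is already a dict,
    # so we just copy out the EBITDA margin per company.
    for doc in packet.documents:
        packet.figures[doc["company"]] = doc["ebitda_margin"]
    return packet


def make_benchmark_check(benchmarks, tolerance):
    """Build a step that flags margins far from the internal benchmark."""
    def cross_reference(packet):
        for company, margin in packet.figures.items():
            benchmark = benchmarks.get(company)
            if benchmark is None:
                packet.anomalies.append(f"{company}: no internal benchmark")
            elif abs(margin - benchmark) > tolerance:
                packet.anomalies.append(
                    f"{company}: margin {margin:.0%} vs benchmark {benchmark:.0%}"
                )
        return packet
    return cross_reference


def run_pipeline(packet, steps):
    """Run each step in order and keep a record of what ran."""
    for step in steps:
        packet = step(packet)
        packet.steps_run.append(step.__name__)
    return packet


packet = DealPacket(documents=[
    {"company": "TargetCo", "ebitda_margin": 0.42},
    {"company": "PeerCo", "ebitda_margin": 0.21},
])
steps = [
    extract_figures,
    make_benchmark_check({"TargetCo": 0.25, "PeerCo": 0.20}, tolerance=0.05),
]

result = run_pipeline(packet, steps)
for note in result.anomalies:
    print(note)
```

Notice where the analyst's judgment lives: in the order of the steps, in the benchmark table, and in the tolerance. None of that is programming in the heavy sense, but someone has to decide it.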

In that sense, the mid-level role is actually getting more strategically important. They become the link between raw AI capability and the firm’s decision-making cadence. If they do their job well, the senior team sees insights in days that used to take weeks, with enough context to act on them. If they do it badly, you get speed without accuracy, which is worse than no AI at all.

Senior Analysts and Executives: Oversight, Not Oversimplification

The top layer doesn’t escape change either. When you can get a clean, AI-generated draft of an industry comp set or a transaction model in an afternoon, you can cover more ground. That means you can look at more deals, model more scenarios, engage with more counterparties. The leverage is real.

But the job tilts even harder toward judgment. Which anomalies matter? Which scenarios are worth presenting to the board? How do you interpret a model’s projection when you know it’s built partly on historical data that doesn’t reflect current macro conditions? AI can flag, summarize, and project, but it can’t own the consequences.

The other change is client-facing. Senior people can now walk into a meeting with real-time, AI-updated numbers and tailored insights. That shifts the expectation. Clients don’t just want the quarterly review, they want the “what does this mean for me right now?” answer. If your firm can deliver that consistently, you win. If you can’t, someone else will.

The Workflow Is the Product

This is worth pausing on. The competitive advantage isn’t “we use AI.” It’s “we have a workflow that gets from raw data to decision faster and more reliably than anyone else.” The tech is available to everyone. The integration—how you connect it to your processes, your data, your compliance framework—is not.

That’s why early movers who are building proprietary AI workflows on top of their own data are pulling ahead. They’re not just using generic LLMs; they’re embedding their methodologies, risk models, and sector knowledge into the prompts and the training data. Every time the model runs, it gets better at producing their kind of analysis. That’s a moat.

Tools Don’t Disappear, They Change Role

A quick detour into tools, because I hear this question a lot: does Excel go away? The short answer is no. The long answer is that Excel becomes the front-end workspace that connects to AI and BI systems. It’s still the fastest, most universally understood way to manipulate small-to-medium datasets. It’s also where a lot of final formatting and last-mile calculation happens.

Microsoft is already baking AI into Excel—natural language queries, automated insights, better integration with Power BI and Azure ML. It’s not competing with Python for large-scale modeling or with Tableau for high-end visualization, but it doesn’t have to. It coexists. The analyst of the near future will happily pivot between Excel, a BI dashboard, and an AI chat interface in the course of a single morning.

The more interesting choice for senior leaders is whether to rely entirely on external AI tools or to build internal ones. External gets you speed to adoption. Internal gets you control and the ability to encode your proprietary processes. There’s no single right answer, but the firms that just bolt on external tools without rethinking workflow tend to get the least value.

Governance, Compliance, and Risk

Now the part that doesn’t get enough airtime in the hype cycle: governance. Financial analysis doesn’t happen in a vacuum. You’re operating under AML, KYC, Basel risk standards, SEC rules, FINRA oversight. Every AI tool you deploy has to fit inside that box. That means documenting how it works, testing for bias, and building audit trails.

Bias isn’t just a moral problem, it’s a regulatory one. If your credit model is trained on historical data with gender or racial disparities baked in, you could be making illegal lending decisions without realizing it. Fixing that requires both technical and human oversight—regular audits of training data, monitoring of outputs, and the ability to override the model when needed.

Security is the other piece. You’re dealing with sensitive financial data. If your AI tool runs in the cloud, who has access? How is it encrypted? What’s the vendor’s breach history? These aren’t afterthoughts. They’re part of the decision to deploy at all.

And don’t forget the human side of security. A lot of breaches happen because someone clicks the wrong link or uploads the wrong file. Training your teams on AI security best practices is as important as any technical control.

Strategic Implications for the C-Suite

Pulling it together, there are a few big-picture implications senior leaders should be acting on now.

First, rethink the talent model. You can’t just cut juniors and hope the AI fills the gap. You need to design work so that analysts still develop judgment, even if they’re not spending days in the data trenches. That might mean more rotation between functions, more shadowing of client meetings, more deliberate training on anomaly detection and source validation.

Second, invest in integration. The value isn’t in the tool, it’s in how it fits your existing systems and processes. That includes compliance, data governance, and client-facing workflows. An AI that can’t produce output in the format your risk committee requires is useless, no matter how smart it is.

Third, measure ROI the right way. Don’t just look at hours saved. Track time-to-insight, analyst coverage ratios, error rates, client satisfaction. Those are the metrics that tell you if AI is actually making you more competitive.
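As a sketch of what tracking these side by side might look like, here is a small Python example. The `PeriodStats` fields, the `relative_change` helper, and every number are made up for illustration, and client satisfaction is left out because it needs survey data. The toy figures are chosen so that speed and coverage improve while the error rate gets worse, which is the case an hours-saved metric would hide.

```python
from dataclasses import dataclass


@dataclass
class PeriodStats:
    """Raw counts for one reporting period (hypothetical fields)."""
    requests: int                  # analysis requests delivered
    total_hours_to_insight: float  # summed hours from request to delivery
    figures_checked: int           # figures sampled in review
    figures_wrong: int             # sampled figures found to be wrong
    companies_covered: int
    analysts: int

    @property
    def time_to_insight_hours(self):
        return self.total_hours_to_insight / self.requests

    @property
    def error_rate(self):
        return self.figures_wrong / self.figures_checked

    @property
    def coverage_ratio(self):
        return self.companies_covered / self.analysts


def relative_change(before, after):
    """Fractional change in each metric from `before` to `after`."""
    names = ["time_to_insight_hours", "error_rate", "coverage_ratio"]
    return {
        name: (getattr(after, name) - getattr(before, name)) / getattr(before, name)
        for name in names
    }


# Made-up numbers for a team of ten, before and after an AI rollout.
before = PeriodStats(40, 960.0, 2000, 60, 120, 10)
after = PeriodStats(50, 600.0, 2500, 100, 180, 10)

change = relative_change(before, after)
for name, value in change.items():
    print(f"{name}: {value:+.0%}")
```

In this toy case, time-to-insight halves and coverage rises by half, but the error rate climbs by a third. That is the trade-off a leadership dashboard needs to surface.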

Fourth, accept that this is an arms race. The firms that figure out human-AI collaboration fastest will pull ahead. The ones that lag will find their speed, cost structure, and client expectations out of sync with the market.

Where This Leaves Us

I keep coming back to this idea of AI as a force multiplier, not a replacement. The best analysts will use it to cover more ground, test more scenarios, and spend more time in front of clients. The worst will let it replace their thinking and become overconfident in its accuracy.

For senior leaders, the challenge is to steer your teams toward the first outcome and guard against the second. That means culture as much as technology. Make it normal to question the AI’s output. Make it normal to trace numbers back to sources. Make it normal to pair AI speed with human skepticism.

The next few years will be the shakeout. The technology will keep getting better. The question is whether your workflows, your talent model, and your governance keep pace. If they do, you can turn AI from a buzzword into a competitive advantage. If they don’t, you’ll be running the same race as everyone else, just with fancier shoes.